Service Overview

A recent application security report notes that the sources of website requests are gradually shifting. Traffic generated by non-human clients is referred to as machine traffic. Although the phrase has negative connotations, whether machine traffic is harmful depends on its purpose. Search engines and voice assistants, for example, generate useful machine traffic, and most enterprises welcome it.

Undesired machine behaviors, on the other hand, include forged credentials, information theft, web scraping, service disruption attacks, and unauthorized crawling in preparation for click fraud. The machines behind these behaviors fall into four groups. Headless browsers account for 46%: built mainly for automated quality testing, they drive website actions through automation in a browser-like environment. Humanized bots account for another 23%: they run in ordinary web browsers and simulate mouse movements and keystrokes, but are still easy to recognize. Script bots make up 16% of undesired machines. Built with scripting tools, they send cURL-like requests from just a few IP addresses, but they cannot store cookies or execute JavaScript and lack the other features of a web browser.

The remaining 15% are distributed bots, which have the most advanced human-like interaction capabilities. They can move the cursor randomly and behave in a very human-like way, which makes them very difficult to recognize.
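The four groups can be written down as data to show why a simple challenge is not enough on its own. By the description above, a check that requires storing a cookie and running JavaScript stops script bots, i.e. 16% of undesired machines. This is an illustrative sketch only: the `BotCategory` type and the challenge function are hypothetical, and treating the other three groups as able to store cookies and run JavaScript is an assumption based on their use of real or browser-like environments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BotCategory:
    name: str
    share_percent: int          # share of undesired machines, from the figures above
    stores_cookies: bool
    executes_javascript: bool


# Script bots' capabilities come from the text. The other three groups are
# ASSUMED to store cookies and run JavaScript because they use real or
# browser-like environments.
CATEGORIES = [
    BotCategory("headless browser", 46, True, True),
    BotCategory("humanized bot", 23, True, True),
    BotCategory("script bot", 16, False, False),
    BotCategory("distributed bot", 15, True, True),
]


def fails_cookie_js_challenge(category: BotCategory) -> bool:
    """Hypothetical challenge that requires both a stored cookie and JavaScript execution."""
    return not (category.stores_cookies and category.executes_javascript)


stopped = [c.name for c in CATEGORIES if fails_cookie_js_challenge(c)]
stopped_share = sum(c.share_percent for c in CATEGORIES if fails_cookie_js_challenge(c))

assert sum(c.share_percent for c in CATEGORIES) == 100
print(stopped)        # ['script bot']
print(stopped_share)  # 16
```

Under these assumptions the other 84% would pass such a check, which is why behavior-based detection matters for the remaining groups.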

Akamai Bot Manager offers a flexible framework for managing the large number of crawlers that visit your website every day. It can identify crawlers on their first visit, categorize them by type, and apply appropriate management actions to particular crawler types. This gives your business better control over how your website interacts with each type of crawler, helping you optimize profit and reduce the impact of crawlers on your business and IT infrastructure.

Features

- Intelligent Analysis

Akamai continuously updates its crawler directory tree using big data analysis from Cloud Security Intelligence (CSI). The directory currently covers a total of 1,400 common crawlers in 17 main categories.

- Unknown Crawler Detection

Akamai WAP uses several technologies to detect traffic from unknown crawlers, including user behavior analysis, browser fingerprinting, automated browser detection, HTTP anomaly detection, and high request rate detection.
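Of the techniques listed, high request rate detection is the simplest to illustrate. The sketch below uses a per-client sliding-window counter, one common way to implement it; it is not Akamai's implementation, and the `RequestRateDetector` class, the client identifier, and the thresholds are hypothetical.

```python
from collections import defaultdict, deque


class RequestRateDetector:
    """Flag clients whose request count in a sliding time window exceeds a limit.

    The limit and window are hypothetical example values, not Akamai settings.
    Timestamps per client are assumed to arrive in non-decreasing order.
    """

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # client id -> timestamps of requests still inside the window
        self._timestamps = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one request and return True if the client is now over the limit."""
        window = self._timestamps[client_id]
        window.append(timestamp)
        # Evict requests that are at least window_seconds old.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.max_requests


detector = RequestRateDetector(max_requests=5, window_seconds=1.0)
burst = [detector.record("203.0.113.7", i * 0.1) for i in range(8)]
print(burst)  # [False, False, False, False, False, True, True, True]
```

With a limit of 5 requests per second, a client sending eight requests 100 ms apart is flagged from the sixth request onward. A rate signal like this is only one input: a bot that spreads requests across many sources can stay under any single-client limit, so it is combined with the other techniques listed above.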

- Machine Learning

Akamai WAP uses machine learning to automatically update how it recognizes crawler traits and behavior, such as a crawler's behavior model and its reputation rating on the Akamai platform.

- Self-Defined Management

You can create custom signatures to manage crawlers that you already know about. You can also assign different actions to different types of crawlers according to their impact on your business and IT infrastructure.

Akamai WAP supports a range of actions for different crawler types, including notification, blocking, delay, alternate content, and more. You can also apply different actions depending on the value of the URL, a specific time window, or a traffic split.
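To make per-category actions concrete, here is a minimal sketch of a rule table that picks an action from the crawler category, the URL, the time of day, and a traffic-split percentage. The action labels, the `Rule` and `choose_action` names, the example categories, and first-match evaluation are all assumptions for illustration; they do not reflect Bot Manager's configuration format.

```python
import zlib
from dataclasses import dataclass
from datetime import time

# Hypothetical labels standing in for notification, blocking, delay, and alternate content.
ACTIONS = {"monitor", "deny", "delay", "serve_alternate"}


@dataclass(frozen=True)
class Rule:
    category: str
    action: str
    url_prefix: str = "/"         # only URLs under this prefix match
    start: time = time.min        # active window start, inclusive
    end: time = time.max          # active window end, inclusive (no midnight wrap)
    split_percent: int = 100      # share of matching clients that get this action

    def __post_init__(self) -> None:
        if self.action not in ACTIONS:
            raise ValueError(f"unknown action: {self.action}")
        if not 0 <= self.split_percent <= 100:
            raise ValueError("split_percent must be between 0 and 100")


def choose_action(rules, category, url, now, client_id, default="monitor"):
    """Return the action of the first rule matching category, URL, time, and split."""
    # Deterministic bucket in [0, 100) so a client always falls in the same split.
    bucket = zlib.crc32(client_id.encode("utf-8")) % 100
    for rule in rules:
        if rule.category != category or not url.startswith(rule.url_prefix):
            continue
        if not rule.start <= now <= rule.end:
            continue
        if bucket < rule.split_percent:
            return rule.action
    return default


rules = [
    Rule("price scraper", "serve_alternate", url_prefix="/products"),
    Rule("price scraper", "delay", start=time(9, 0), end=time(18, 0)),
    Rule("search engine", "monitor"),
]
print(choose_action(rules, "price scraper", "/products/42", time(10, 30), "client-1"))  # serve_alternate
print(choose_action(rules, "price scraper", "/blog", time(10, 30), "client-1"))         # delay
print(choose_action(rules, "price scraper", "/blog", time(20, 0), "client-1"))          # monitor
```

Hashing the client identifier keeps the traffic split stable, so the same client is not flipped between actions from one request to the next. Time windows that cross midnight would need two rules in this sketch.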

- Mobile App Protection

Akamai protects your native mobile apps and APIs against automated attacks such as credential stuffing. You can connect native mobile apps to Akamai's crawler detection using the Akamai Bot Manager mobile SDK.

- Reports and Analysis

The dashboard gives you clear visibility into crawler activity through analysis charts, helping you quickly understand attack patterns and methods. Bot Manager can also integrate with other security solutions and with SIEM platforms, giving you richer information about security-relevant events.

Implementation Steps

- Step 1: Apply for Akamai Resources

You need to provide the following information to apply for Akamai resources:

- Current peak and average traffic volume of your website

- A contact person, including first name, last name, email address, and phone number

- The FQDN and IP address of the website to be optimized, e.g. bot.wingwill.com.tw

- Step 2: Apply for a Website Credential, Set Up the Platform, and Publish

You will need to provide your website credential to complete the application. We will then configure your settings and publish them to Akamai's network. You will also need to add a new FQDN and IP address, e.g. orig-dsa.wingwill.com.tw, which Akamai Edge Servers will use to send requests to your origin.

- Step 3: Repoint Your Site's DNS to Direct Traffic to the CDN Platform

We will replace your site's DNS A record with a CNAME record pointing to an Akamai Edge Server FQDN, e.g. wingwill.com.tw.edgekey.net.
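After the DNS change, one way to sanity-check it is to resolve the site name and confirm that the CNAME chain passes through the edge hostname. This minimal sketch uses Python's standard `socket.gethostbyname_ex`, which returns the canonical name, an alias list, and the addresses. Whether intermediate CNAMEs appear in the alias list depends on the platform resolver, so treat a negative result as a prompt to check with a DNS tool such as `dig` rather than as proof. The function names and the `.edgekey.net` suffix (taken from the example above) are assumptions.

```python
import socket


def chain_reaches_suffix(names, suffix=".edgekey.net"):
    """Return True if any name in a resolved CNAME chain ends with the given suffix."""
    suffix = suffix.lower()
    return any(name.rstrip(".").lower().endswith(suffix) for name in names)


def site_uses_edge_hostname(hostname, suffix=".edgekey.net"):
    """Resolve hostname and check whether its canonical name or aliases end with suffix.

    Performs a live DNS lookup; alias reporting varies by platform resolver.
    """
    canonical, aliases, _addresses = socket.gethostbyname_ex(hostname)
    return chain_reaches_suffix([canonical, *aliases], suffix)
```

For example, `site_uses_edge_hostname("wingwill.com.tw")` performs a live lookup, while `chain_reaches_suffix` can be checked offline against a chain copied from `dig` output.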

- Step 4: Analyze Traffic Volume & Security Events and Adjust

Akamai provides comprehensive reports on your traffic volume and security events. The reporting and analysis tools in Akamai's Security Center notify your business of incidents and collect key data such as critical points in time, affected scope, and attack methods, so you can respond to security incidents immediately. These built-in reports also help your business understand user request behavior and traffic status, and optimize site content.

Is This for My Business?

- I want to reduce the impact of undesired machine behaviors on my services.

- I want to react to and manage both known and unknown crawler and bot behaviors.

- I want to filter crawler traffic to prevent my digital assets from being stolen for profit.

Service Architecture

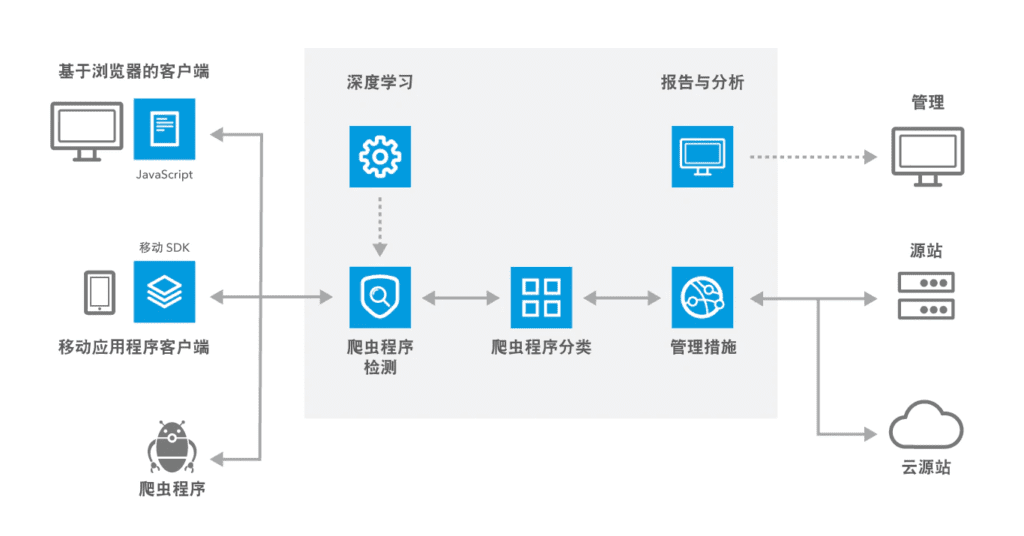

Bot Manager uses the Akamai Intelligence Platform to identify, categorize, manage, and report on crawler traffic.

Working principles of Bot Manager